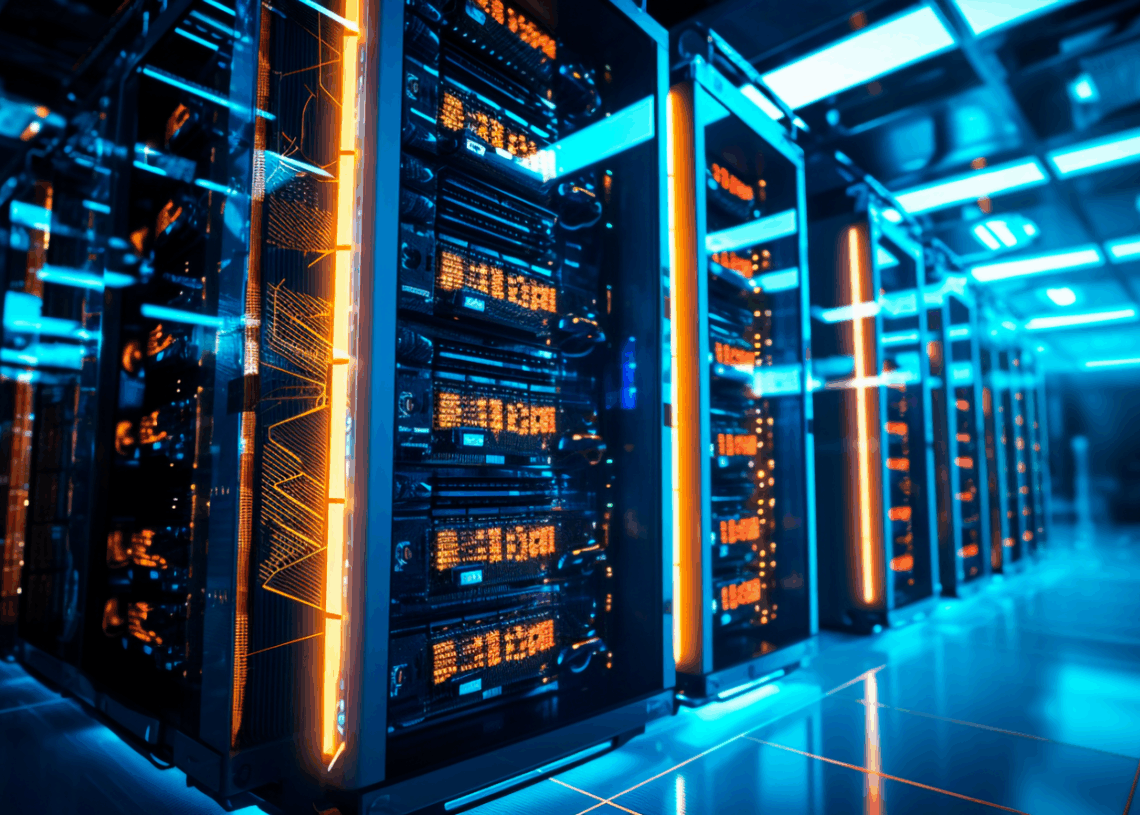

In an era dominated by the ceaseless flow of digital information, the hyperscale data center stands as the silent, powerful engine of the modern world. From streaming movies and powering global finance to enabling artificial intelligence and the Internet of Things (IoT), these colossal facilities are the bedrock of our digital existence. However, with immense power comes immense responsibility—and an equally immense operational cost. The quest for optimizing hyperscale efficiency is not merely a technical challenge; it is an economic and environmental imperative.

As reliance on cloud services explodes, the energy consumption of these facilities has come under intense scrutiny. Optimizing their efficiency is paramount to managing operational expenditures (OpEx), ensuring sustainable growth, and mitigating environmental impact. This comprehensive article delves into the multi-faceted strategies and cutting-edge technologies being deployed to push the boundaries of hyperscale efficiency. We will explore everything from foundational metrics and innovative cooling solutions to custom hardware and the intelligent software layer that orchestrates it all. This is the definitive guide to understanding how the giants of the tech world are building a faster, cheaper, and greener digital future.

The Hyperscale Paradigm: A New Class of Computing

Before diving into optimization, it’s crucial to understand what makes a data center “hyperscale.” It’s a classification that goes far beyond mere size. While these facilities are indeed massive, often spanning hundreds of thousands or even millions of square feet, their defining characteristics lie in their architecture and operational philosophy.

A hyperscale data center is characterized by its ability to scale out rapidly, adding thousands of servers and vast amounts of storage with minimal disruption. These facilities are built on principles of homogeneity and automation. Instead of mixing servers and networking gear from multiple vendors, hyperscalers deploy vast fleets of identical, custom-designed machines built largely from commodity components. This uniformity simplifies management, reduces costs, and allows for unprecedented levels of automation in provisioning, monitoring, and maintenance. Companies like Google, Amazon (AWS), Microsoft (Azure), and Meta (Facebook) are the pioneers and primary operators of these facilities, designing their infrastructure from the ground up to support their specific, massive-scale workloads.

Core Pillars of Hyperscale Efficiency Optimization

Achieving peak efficiency in such a complex environment requires a holistic approach that addresses every component of the data center stack. The strategy can be broken down into several key pillars, each offering significant opportunities for improvement.

A. Power Infrastructure Efficiency: This involves minimizing the energy lost in the process of delivering electricity from the grid to the IT equipment. It is the most fundamental layer of data center efficiency.

B. Cooling and Thermal Management: A significant portion of a data center’s energy consumption is dedicated to removing the waste heat generated by servers. Innovations in cooling have a direct and substantial impact on overall efficiency.

C. Compute and Hardware Optimization: This focuses on maximizing the computational output for every watt of power consumed. It involves custom silicon, innovative server designs, and high-density deployments.

D. Software, Automation, and AIOps: The intelligent layer that manages workloads, provisions resources, and predicts failures. Advanced software can dynamically adjust infrastructure to match demand, eliminating waste and improving performance.

E. Sustainability and Environmental Metrics: Beyond just electricity, modern efficiency now includes metrics for water usage, carbon emissions, and the lifecycle management of hardware, reflecting a broader commitment to environmental stewardship.

Deconstructing Power Efficiency: Beyond PUE

For over a decade, the primary metric for measuring data center infrastructure efficiency has been Power Usage Effectiveness (PUE). It provides a simple, effective benchmark for understanding how much energy is being “wasted” on non-IT functions like cooling and power distribution.

The formula is straightforward:

$$\text{PUE} = \frac{\text{Total Facility Energy}}{\text{IT Equipment Energy}}$$

A perfect PUE of 1.0 would mean that 100% of the energy entering the data center reaches the IT equipment, with nothing spent on overhead. While the industry average hovers around 1.5, hyperscalers consistently achieve PUEs below 1.2, with some of the most advanced facilities dipping below 1.1. This represents a monumental achievement in engineering.
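To make the gap between these figures concrete, the short Python sketch below computes PUE and the resulting overhead energy for a hypothetical facility with a constant 10 MW IT load. The load and the PUE values are illustrative assumptions, not measurements from any real site.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Non-IT energy (cooling, power conversion, lighting) implied by a given PUE."""
    return it_equipment_kwh * (pue_value - 1.0)


# Hypothetical facility: constant 10 MW (10,000 kW) IT load for one year.
IT_KWH_PER_YEAR = 10_000 * 8_760

for p in (1.5, 1.2, 1.1):
    overhead = overhead_kwh(IT_KWH_PER_YEAR, p)
    share_to_it = 1.0 / p  # fraction of facility energy that reaches IT gear
    print(f"PUE {p}: overhead {overhead / 1e6:.1f} GWh/yr, {share_to_it:.0%} reaches IT")
```

At PUE 1.5 the overhead works out to 43.8 GWh per year; at 1.1 it falls to about 8.8 GWh, and roughly 91% of incoming energy (1 / 1.1) reaches the IT equipment.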

Achieving such low PUE values requires a meticulous focus on the entire power chain:

A. High-Efficiency Uninterruptible Power Supplies (UPS): Modern UPS systems, particularly those operating in “eco-mode,” can achieve efficiencies exceeding 99%. They are critical for ensuring clean, consistent power while minimizing energy loss.

B. Optimized Power Distribution: Hyperscalers often design their power distribution units (PDUs) and busbars to minimize resistive losses over the vast distances within the facility. Some are even moving towards higher voltage distribution to reduce current and, consequently, heat loss.
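The benefit of higher distribution voltage follows from the resistive-loss relation P_loss = I²R: for the same delivered power, doubling the voltage halves the current and therefore cuts conductor losses to a quarter. The sketch below uses a deliberately simplified model (I = P / V, as for DC or unity-power-factor single-phase power) with a hypothetical 100 kW feed and a 0.01 Ω run; real three-phase AC distribution involves terms this model leaves out.

```python
def conductor_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """I^2 * R loss in a conductor delivering power_w at voltage_v.

    Simplified model: I = P / V (DC or unity power factor single-phase).
    """
    current_a = power_w / voltage_v
    return current_a ** 2 * resistance_ohm


# Hypothetical feed: 100 kW delivered over a run with 0.01 ohm total resistance.
for volts in (240, 480):
    loss = conductor_loss_w(100_000, volts, 0.01)
    print(f"{volts} V: {loss:,.0f} W lost ({loss / 100_000:.2%} of delivered power)")
```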

C. Direct Current (DC) Power: While most data centers run on Alternating Current (AC), some hyperscalers have experimented with and deployed DC power distribution. This approach eliminates some of the AC-to-DC conversion steps required by server power supplies, potentially trimming a few crucial percentage points of energy loss.
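Because conversion stages sit in series, their efficiencies multiply, so removing even one stage can matter. The sketch below compares two hypothetical chains; every stage efficiency is an illustrative assumption chosen only to show the arithmetic, not a measured value for any product or deployment.

```python
from math import prod


def chain_efficiency(stage_efficiencies: dict[str, float]) -> float:
    """End-to-end efficiency of conversion stages in series (product of stages)."""
    return prod(stage_efficiencies.values())


# Hypothetical stage efficiencies, for illustration only.
ac_chain = {
    "UPS (double conversion)": 0.97,
    "PDU transformer": 0.985,
    "server PSU (AC to DC)": 0.94,
}
dc_chain = {
    "facility rectifier (AC to DC)": 0.96,
    "server DC-DC converter": 0.97,
}

for name, chain in (("AC", ac_chain), ("DC", dc_chain)):
    print(f"{name} distribution: {chain_efficiency(chain):.1%} end to end")
```

With these assumed numbers the DC chain comes out about 3 percentage points ahead (roughly 93.1% versus 89.8%).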

However, PUE has its limitations. It doesn’t account for the efficiency of the IT equipment itself or the facility’s environmental impact. This has led to the development of complementary metrics like Water Usage Effectiveness (WUE) and Carbon Usage Effectiveness (CUE), which are becoming increasingly important in the age of sustainable computing.
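Both metrics were defined by The Green Grid and, like PUE, normalize by IT equipment energy: WUE is annual site water use in liters per kWh of IT energy, and CUE is the CO2-equivalent emitted for the facility's total energy per kWh of IT energy, which reduces to the carbon emission factor multiplied by PUE when a single emission factor applies. The sketch below assumes exactly that single-factor case; all input figures are hypothetical.

```python
def wue(site_water_liters: float, it_equipment_kwh: float) -> float:
    """Water Usage Effectiveness in L/kWh: annual site water use per kWh of IT energy."""
    return site_water_liters / it_equipment_kwh


def cue(total_facility_kwh: float, kgco2e_per_kwh: float, it_equipment_kwh: float) -> float:
    """Carbon Usage Effectiveness in kgCO2e per kWh of IT energy.

    Assumes all facility energy has one emission factor, so CUE = factor * PUE.
    """
    return total_facility_kwh * kgco2e_per_kwh / it_equipment_kwh


# Hypothetical annual figures for a single site.
it_kwh = 1_000_000
facility_kwh = 1_150_000      # implies PUE = 1.15
water_liters = 1_800_000
grid_factor = 0.4             # assumed kgCO2e per kWh for the local grid

print(f"WUE = {wue(water_liters, it_kwh):.2f} L/kWh")
print(f"CUE = {cue(facility_kwh, grid_factor, it_kwh):.2f} kgCO2e/kWh")
```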

The War on Heat: Advanced Cooling Technologies

Servers generate immense heat, and managing this thermal load is arguably the single greatest challenge in data center design. Inefficient cooling can easily become the biggest drain on a facility’s power budget. Hyperscalers have moved far beyond traditional computer room air conditioning (CRAC) units, embracing a suite of innovative strategies.

A. Free Air Cooling (Economization): The most energy-efficient method is to use the outside air for cooling whenever possible. In suitable climates, a data center can use ambient air—either directly or through an air-to-air heat exchanger—for a significant portion of the year. This dramatically reduces the need for energy-intensive mechanical chillers. Google has famously championed this approach, designing facilities to maximize their use of outside air.
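Whether a site suits economization is largely a question of how many hours the outside air is cool enough. The minimal sketch below counts those hours from a series of hourly dry-bulb readings. The 27 °C supply limit and the heat-exchanger approach temperature are assumptions for illustration, and humidity, which real economizer controls must also respect, is ignored.

```python
def free_cooling_hours(outdoor_temps_c: list[float],
                       supply_limit_c: float = 27.0,
                       hx_approach_c: float = 0.0) -> int:
    """Count hours in which outside air alone can meet the supply-air limit.

    hx_approach_c is the temperature penalty of an air-to-air heat exchanger;
    use 0 for direct outside-air cooling. Humidity limits are not modeled.
    """
    return sum(1 for t in outdoor_temps_c if t + hx_approach_c <= supply_limit_c)


# Hypothetical hourly readings (deg C); a real study would use a full year (8,760 hours).
readings = [8.0, 14.5, 21.0, 25.5, 28.0, 31.0, 19.0, 12.0]
direct = free_cooling_hours(readings)
indirect = free_cooling_hours(readings, hx_approach_c=3.0)
print(f"direct: {direct}/{len(readings)} h, indirect: {indirect}/{len(readings)} h")
```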

B. Hot/Cold Aisle Containment: This is a foundational data center design principle. By physically separating the cold air intake of servers from their hot air exhaust, containment prevents the mixing of hot and cold air. This simple concept dramatically increases the efficiency of any cooling system, allowing for higher temperature setpoints and reduced fan speeds.

C. Liquid Cooling: As server rack densities continue to increase, air is reaching the limits of its ability to effectively remove heat. Liquids conduct heat far better than air and, more importantly, carry far more heat per unit volume (water's volumetric heat capacity is roughly 3,500 times that of air), which makes them the next frontier. There are two primary approaches:

1. Direct-to-Chip (DTC) Cooling: In this method, liquid is piped directly to a cold plate attached to the hottest components on the motherboard, such as the CPU and GPU. The liquid absorbs the heat and carries it away to be cooled, a much more efficient process than filling an entire room with cold air.

2. Immersion Cooling: This is the most radical approach. Entire servers are submerged in a non-conductive, dielectric fluid. This eliminates the need for server fans entirely and transfers heat directly from every component into the fluid. Two-phase immersion, where the fluid boils on the surface of components and the vapor condenses on a cooled coil or lid above the bath, offers even higher performance and is being explored for extreme-density AI and high-performance computing (HPC) workloads.
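The advantage of liquid shows up directly in the sensible-heat relation Q = rho * V_dot * c_p * dT. The sketch below solves it for the volumetric flow needed to remove a hypothetical 50 kW rack load with a 10 K coolant temperature rise, using rounded room-temperature property values for air and water.

```python
# Approximate properties near room temperature (rounded textbook values).
FLUIDS = {
    "air": {"density_kg_m3": 1.2, "cp_j_kgk": 1005.0},
    "water": {"density_kg_m3": 997.0, "cp_j_kgk": 4180.0},
}


def required_flow_m3_per_s(heat_w: float, delta_t_k: float, fluid: str) -> float:
    """Volumetric flow needed to carry heat_w with a coolant temperature rise of delta_t_k.

    From Q = rho * V_dot * c_p * dT, so V_dot = Q / (rho * c_p * dT).
    """
    props = FLUIDS[fluid]
    return heat_w / (props["density_kg_m3"] * props["cp_j_kgk"] * delta_t_k)


# Hypothetical 50 kW rack with a 10 K coolant temperature rise.
for fluid in FLUIDS:
    flow = required_flow_m3_per_s(50_000, 10, fluid)
    print(f"{fluid}: {flow * 1000:,.2f} L/s")
```

With these inputs, air needs on the order of 4 cubic meters per second while water needs about 1.2 liters per second for the same heat load.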

D. AI-Driven Thermal Management: The ultimate layer of cooling optimization comes from software. Companies like Google are using sophisticated AI and machine learning models, fed by thousands of sensors throughout the data center, to create a “digital twin” of their thermal environment. This AI can predict how changes, such as a shift in weather or in IT load, will affect temperatures and proactively adjust cooling systems for optimal efficiency, often finding settings that human operators would struggle to identify manually.
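At its core, this kind of optimization uses a learned model to predict the outcome of candidate control settings and then picks the cheapest setting that keeps temperatures safe. The minimal sketch below shows only that selection step. The `toy_model` function is a hypothetical hand-written stand-in for a trained model, and its coefficients are invented for illustration; it does not describe Google's or any other operator's system.

```python
from typing import Callable, Iterable, Optional, Tuple

# A predictive model maps (chilled-water setpoint C, outdoor temp C, IT load kW)
# to (predicted cooling power kW, predicted hottest server inlet temp C).
Model = Callable[[float, float, float], Tuple[float, float]]


def choose_setpoint(model: Model, candidates: Iterable[float],
                    outdoor_c: float, it_load_kw: float,
                    max_inlet_c: float) -> Optional[float]:
    """Return the candidate with the lowest predicted cooling power whose
    predicted inlet temperature stays within max_inlet_c (None if none do)."""
    best, best_power = None, float("inf")
    for setpoint in candidates:
        power_kw, inlet_c = model(setpoint, outdoor_c, it_load_kw)
        if inlet_c <= max_inlet_c and power_kw < best_power:
            best, best_power = setpoint, power_kw
    return best


def toy_model(setpoint_c: float, outdoor_c: float, it_load_kw: float) -> Tuple[float, float]:
    # Hypothetical: warmer water saves chiller energy but raises inlet temps,
    # and more IT load raises both cooling power and inlet temperature.
    power_kw = 0.3 * it_load_kw + 5.0 * outdoor_c - 20.0 * setpoint_c
    inlet_c = setpoint_c + 6.0 + it_load_kw / 500.0
    return power_kw, inlet_c


candidates = [10, 12, 14, 16, 18, 20]
print(choose_setpoint(toy_model, candidates, outdoor_c=20.0, it_load_kw=1500.0, max_inlet_c=27.0))
print(choose_setpoint(toy_model, candidates, outdoor_c=20.0, it_load_kw=2500.0, max_inlet_c=27.0))
```

Because the prediction depends on IT load, the chosen setpoint drops from 18 to 16 as the load rises from 1,500 to 2,500 kW in this toy example.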

Hardware Innovation: Maximizing Performance-per-Watt

The efficiency of the IT equipment itself is a critical part of the equation. PUE measures only facility overhead, so a low PUE says nothing about how much useful work the servers deliver per watt. Hyperscalers have taken control of their hardware destiny, moving away from off-the-shelf equipment to custom designs tailored to their specific needs.
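One way to see why PUE alone is not enough: since facility energy equals IT energy multiplied by PUE, the useful work delivered per kWh drawn by the whole facility is the IT equipment's work per kWh divided by PUE. The sketch below compares two hypothetical fleets; the workload unit and all numbers are assumptions for illustration.

```python
def work_per_facility_kwh(work_per_it_kwh: float, pue_value: float) -> float:
    """Useful work per kWh of total facility energy = (work per IT kWh) / PUE."""
    return work_per_it_kwh / pue_value


# Hypothetical fleets; "requests" stands in for any unit of useful work.
fleets = {
    "fleet A (older servers, PUE 1.10)": (1_000_000, 1.10),
    "fleet B (newer servers, PUE 1.40)": (2_000_000, 1.40),
}
for name, (requests_per_it_kwh, pue_value) in fleets.items():
    result = work_per_facility_kwh(requests_per_it_kwh, pue_value)
    print(f"{name}: {result:,.0f} requests per facility kWh")
```

Fleet B comes out ahead despite its worse PUE, because its servers do twice the work per unit of IT energy.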

A. Custom Silicon and Accelerators: General-purpose CPUs are not always the most efficient tool for the job. Hyperscalers are designing their own Application-Specific Integrated Circuits (ASICs) and leveraging Field-Programmable Gate Arrays (FPGAs) to accelerate specific workloads. Google’s Tensor Processing Unit (TPU) for AI workloads and AWS’s Nitro System for virtualization and security are prime examples of custom silicon delivering massive performance and efficiency gains.

B. The Open Compute Project (OCP): Initiated by Facebook (now Meta) in 2011, the OCP is a collaborative community dedicated to redesigning data center hardware to be more efficient, flexible, and scalable. OCP designs often feature minimalist, “vanity-free” servers that strip out unnecessary components. The project has also promoted disaggregation, where components like storage, networking, and compute are separated into their own modules, allowing for independent scaling and upgrades, which reduces waste.

C. High-Density Racks: By packing more compute power into each rack and square foot, hyperscalers can reduce the overall facility footprint. This reduces the energy needed for lighting, cooling distribution, and other building overheads. Technologies like liquid cooling are essential enablers of these ultra-high-density deployments.
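The footprint effect is simple arithmetic: for a fixed IT load, the rack count scales inversely with power density. The sketch below uses a hypothetical 10 MW load and an assumed 2.5 m² of floor per rack (including its share of aisle space); both figures are illustrative only.

```python
import math


def racks_needed(it_load_kw: float, kw_per_rack: float) -> int:
    """Minimum number of racks to host it_load_kw at a given per-rack density."""
    return math.ceil(it_load_kw / kw_per_rack)


IT_LOAD_KW = 10_000          # hypothetical 10 MW IT load
FLOOR_M2_PER_RACK = 2.5      # assumed, including a share of aisle space

for density_kw in (10, 40, 100):
    racks = racks_needed(IT_LOAD_KW, density_kw)
    print(f"{density_kw:>3} kW/rack: {racks:>4} racks, ~{racks * FLOOR_M2_PER_RACK:,.0f} m^2")
```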

The Intelligent Layer: Software-Defined Everything (SDx) and AIOps

Hardware provides the potential for efficiency, but software unlocks it. The scale of a hyperscale facility makes manual management impossible. Automation and intelligent orchestration are not optional; they are fundamental.

A. Software-Defined Networking (SDN): SDN decouples the network’s control plane from its data plane. A logically centralized controller can then manage the entire network as a single, cohesive entity through software. Operators can dynamically allocate bandwidth, route traffic around congestion, and provision services in seconds, keeping the network running close to peak efficiency.
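The key idea is that the controller has a global view of the topology and current link load, so it can compute paths centrally and push them to switches. The minimal sketch below shows only the path computation: Dijkstra's algorithm over a hypothetical leaf-spine fabric, using link utilization as the edge cost. Summing utilizations is one simple heuristic among many; real controllers may optimize other objectives, and installing the resulting forwarding rules is out of scope here.

```python
import heapq
from typing import Optional


def least_congested_path(links: dict[tuple[str, str], float],
                         src: str, dst: str) -> Optional[list[str]]:
    """Dijkstra over the controller's global view, with link utilization (0..1) as cost.

    Links are treated as bidirectional with the same utilization in both directions.
    """
    graph: dict[str, list[tuple[str, float]]] = {}
    for (a, b), util in links.items():
        graph.setdefault(a, []).append((b, util))
        graph.setdefault(b, []).append((a, util))

    dist = {src: 0.0}
    prev: dict[str, str] = {}
    heap = [(0.0, src)]
    visited: set[str] = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node == dst:
            break
        for nbr, cost in graph.get(node, []):
            nd = d + cost
            if nd < dist.get(nbr, float("inf")):
                dist[nbr] = nd
                prev[nbr] = node
                heapq.heappush(heap, (nd, nbr))

    if dst not in visited:
        return None  # destination unreachable
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1]


# Hypothetical leaf-spine fabric with current link utilizations.
fabric = {
    ("leaf1", "spine1"): 0.90, ("spine1", "leaf2"): 0.80,
    ("leaf1", "spine2"): 0.20, ("spine2", "leaf2"): 0.30,
}
print(least_congested_path(fabric, "leaf1", "leaf2"))
```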

B. Software-Defined Storage (SDS): Similar to SDN, SDS abstracts storage resources from the underlying hardware. This provides immense flexibility, allowing for features like thin provisioning (allocating space only as it’s needed) and automated tiering (moving data between fast and slow storage based on access patterns), both of which contribute to cost and energy efficiency.
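Both features reduce to simple bookkeeping once storage is abstracted in software. The sketch below is a minimal, in-memory illustration under stated assumptions: the hypothetical `ThinVolume` class allocates a block only on its first write, and the hypothetical `assign_tiers` function ranks objects by access count and places the hottest ones on the fast tier. Neither corresponds to any real SDS product's API.

```python
class ThinVolume:
    """Thin-provisioned volume: advertises logical_blocks, allocates on first write."""

    def __init__(self, logical_blocks: int) -> None:
        self.logical_blocks = logical_blocks
        self._allocated: dict[int, bytes] = {}  # block index -> data

    def write(self, block: int, data: bytes) -> None:
        if not 0 <= block < self.logical_blocks:
            raise IndexError("block out of range")
        self._allocated[block] = data  # physical space is consumed only here

    def allocated_blocks(self) -> int:
        return len(self._allocated)


def assign_tiers(access_counts: dict[str, int], fast_capacity: int) -> dict[str, str]:
    """Place the most-accessed objects on the fast tier, the rest on the slow tier."""
    ranked = sorted(access_counts, key=access_counts.get, reverse=True)
    return {obj: ("fast" if rank < fast_capacity else "slow")
            for rank, obj in enumerate(ranked)}


vol = ThinVolume(logical_blocks=1_000_000)   # advertises one million blocks
vol.write(0, b"header")
vol.write(42, b"data")
print(vol.allocated_blocks(), "of", vol.logical_blocks, "blocks physically allocated")

print(assign_tiers({"logs.tar": 3, "index.db": 950, "photo.jpg": 120}, fast_capacity=2))
```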

C. Infrastructure as Code (IaC): This is the practice of managing and provisioning infrastructure through machine-readable definition files, rather than physical hardware configuration or interactive configuration tools. IaC enables end-to-end automation, ensuring that every server and virtual machine is deployed in a consistent, optimized state and minimizing configuration drift and manual errors.
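Declarative IaC tools generally work by comparing a desired state, written in definition files, against the observed state and computing the changes needed to converge. The sketch below models only that diff step; `ServerSpec`, its fields, and `plan` are hypothetical and do not represent the interface of any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerSpec:
    """Hypothetical declared configuration for one server."""
    os_image: str
    power_profile: str


def plan(desired: dict[str, ServerSpec], actual: dict[str, ServerSpec]) -> dict[str, list[str]]:
    """Compute the changes needed to make actual match desired."""
    return {
        "create": sorted(desired.keys() - actual.keys()),
        "delete": sorted(actual.keys() - desired.keys()),
        # Hosts present in both but whose observed config has drifted.
        "update": sorted(h for h in desired.keys() & actual.keys() if desired[h] != actual[h]),
    }


golden = ServerSpec(os_image="os-2024.06", power_profile="balanced")
desired = {"web-01": golden, "web-02": golden}
actual = {
    "web-01": ServerSpec(os_image="os-2024.06", power_profile="max-performance"),  # drifted
    "old-07": golden,                                                              # no longer declared
}
print(plan(desired, actual))
```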

D. AIOps (AI for IT Operations): This is the application of machine learning and data analytics to automate and improve IT operations. AIOps platforms can ingest telemetry data from millions of sources across the data center to perform predictive analytics. They can anticipate hardware failures before they occur, predict workload spikes to pre-emptively scale resources, and identify “zombie servers”—servers that are powered on but doing no useful work—allowing them to be decommissioned.
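Zombie detection can start from a simple rule before any machine learning is involved. The sketch below flags servers whose average CPU utilization and total network traffic over an observation window both stay below thresholds. This is a fixed-threshold heuristic, not an ML model; the thresholds and sample data are hypothetical, and AIOps platforms may combine many more signals or use learned models instead of fixed cutoffs.

```python
from statistics import mean

# Each sample is (cpu_utilization as a 0..1 fraction, network bytes in the interval).
Telemetry = dict[str, list[tuple[float, int]]]


def find_zombie_candidates(telemetry: Telemetry,
                           cpu_max: float = 0.05,
                           net_bytes_max: int = 10_000_000) -> list[str]:
    """Flag hosts that are powered on but appear to do no useful work."""
    flagged = []
    for host, samples in telemetry.items():
        if not samples:
            continue  # missing data is a monitoring gap, not evidence of idleness
        avg_cpu = mean(cpu for cpu, _ in samples)
        total_net = sum(net for _, net in samples)
        if avg_cpu < cpu_max and total_net < net_bytes_max:
            flagged.append(host)
    return sorted(flagged)


window = {
    "db-03": [(0.62, 4_000_000_000), (0.71, 3_500_000_000)],
    "batch-11": [(0.01, 20_000), (0.02, 15_000)],            # idle and silent
    "cache-05": [(0.02, 900_000_000), (0.03, 850_000_000)],  # low CPU, busy network
}
print(find_zombie_candidates(window))
```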

The Future: A Continuous Journey of Innovation

The quest for hyperscale efficiency is far from over. As new technologies emerge, they present both challenges and opportunities. The explosion of AI has led to a surge in demand for power-hungry GPUs, putting renewed pressure on cooling and power systems. At the same time, that very same AI is providing the tools to manage these complex environments with greater intelligence than ever before.

Looking ahead, we can expect continued innovation in areas like optical interconnects for faster, more efficient data transfer, the maturation of immersion cooling as a mainstream technology, and a deeper integration of sustainability metrics into the core operational dashboards of every data center. The hyperscale data center will continue to evolve, driven by the relentless pursuit of a simple but powerful goal: to deliver infinite digital resources with finite physical means.