The digital age, built upon the simple binary logic of 0s and 1s, has propelled humanity to unimaginable heights. From global communication networks to the supercomputers in our pockets, classical computing has defined the last century. Yet, as we push the boundaries of science, engineering, and data, we are beginning to encounter problems of such staggering complexity that even the most powerful supercomputers of tomorrow would take millennia to solve. This is where the story of classical computing reaches its practical limit, and a new, almost fantastical, chapter begins: the era of quantum computing.

We are now standing at a pivotal threshold. The conversation around quantum is shifting from the esoteric realms of theoretical physics to the tangible world of engineering and enterprise. This transition is being driven by the emergence of “Quantum Ready Hardware,” a term that signifies more than just the quantum processors themselves. It represents the entire ecosystem of technologies—from cryogenic cooling systems to specialized control electronics and hybrid classical-quantum architectures—that are making quantum computation a practical reality. This article delves into the dawn of this quantum-ready age, exploring the diverse hardware platforms leading the charge, the profound implications for industry and security, and the essential steps we must take to prepare for a revolution that is no longer a distant dream, but an impending reality.

Unlocking a New Universe: A Quantum Primer

To appreciate the hardware, one must first grasp the radical principles it is built upon. A classical computer stores and processes information using bits, which can exist in one of two definite states: either 0 or 1. It is a world of absolutes. Quantum computing, however, operates in a world of probabilities and interconnectedness, leveraging the strange and wonderful laws of quantum mechanics.

Its fundamental unit is the qubit, or quantum bit. Unlike a classical bit, a qubit can be a 0, a 1, or a weighted combination of both at once. This mind-bending property is called superposition. Imagine a spinning coin; until it lands, it is neither heads nor tails, but a blend of both possibilities. A qubit is like that spinning coin, holding a spectrum of potential values until it is measured, at which point it settles on a single 0 or 1. Describing two qubits takes four such weights (one for each of 00, 01, 10, and 11), three qubits take eight, and so on. As you add more qubits, this computational space grows exponentially, quickly outstripping what any classical computer can track. Quantum algorithms work by steering all of these weights at once so that wrong answers cancel out and right answers reinforce, rather than by simply running many classical calculations in parallel.
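To make the spinning-coin picture a little more concrete, here is a minimal sketch in plain Python. It is a toy classical simulation, not how a real quantum computer is programmed, and the function names (`hadamard_on_zero`, `measure`, `amplitudes_needed`) are made up for illustration. It shows a qubit in an equal superposition being measured many times, and how quickly the number of values needed to describe a register grows.

```python
import math
import random

def hadamard_on_zero():
    """Amplitudes of a qubit that starts as 0 and is put into an equal superposition."""
    amp = 1 / math.sqrt(2)
    return [amp, amp]  # weight on 0, weight on 1

def measure(amplitudes, rng=random):
    """Pick one outcome; each has probability |amplitude| squared."""
    probs = [abs(a) ** 2 for a in amplitudes]
    return rng.choices(range(len(amplitudes)), weights=probs)[0]

def amplitudes_needed(n_qubits):
    """How many amplitudes describe the joint state of n qubits."""
    return 2 ** n_qubits

if __name__ == "__main__":
    state = hadamard_on_zero()
    counts = [0, 0]
    for _ in range(1000):
        counts[measure(state)] += 1
    print("times we saw 0 and 1:", counts)  # roughly half and half

    for n in (2, 3, 10, 50):
        print(n, "qubits ->", amplitudes_needed(n), "amplitudes")
```

Each individual measurement still returns a plain 0 or 1; the superposition only shows up in the statistics over many runs.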

The second core principle is entanglement. Described by Einstein as “spooky action at a distance,” entanglement is a profound connection between two or more qubits. Once entangled, the state of one qubit is linked to the state of the other, no matter how far apart they are. If you measure one and find it to be a 0, you instantly know the other is also a 0 (or a 1, depending on how they were entangled). The link cannot be used to send messages faster than light, because each individual result is still random. Even so, this interconnectedness creates powerful correlations that allow for highly complex algorithms, enabling quantum computers to solve certain problems that are intractable for their classical counterparts. It is this combination of superposition and entanglement that gives quantum hardware its revolutionary potential.
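The correlation described above can be imitated with a few lines of plain Python. This is only a sketch: it samples measurement outcomes from a standard two-qubit entangled state (often called a Bell state), and the names `BELL_STATE` and `measure_pair` are illustrative choices rather than part of any library.

```python
import math
import random

# Amplitudes for the four two-qubit outcomes, in the order 00, 01, 10, 11.
# Only 00 and 11 carry any weight, so the two qubits always agree.
BELL_STATE = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]

def measure_pair(amplitudes, rng=random):
    """Measure both qubits once and return (first_bit, second_bit)."""
    probs = [abs(a) ** 2 for a in amplitudes]
    outcome = rng.choices(range(4), weights=probs)[0]
    return outcome >> 1, outcome & 1

if __name__ == "__main__":
    for _ in range(10):
        print(measure_pair(BELL_STATE))  # the two bits in each pair always match
```

Which of the two matching results appears is random on every run, which is why the correlation alone cannot carry a message.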

The Contenders: A Survey of Emerging Quantum Hardware

The race to build a scalable, fault-tolerant quantum computer is not a monolithic effort. It is a vibrant and competitive field with several different physical approaches, each with its own unique strengths and immense engineering challenges. These are not merely theoretical models; they are physical systems being built and tested in labs and data centers around the world.

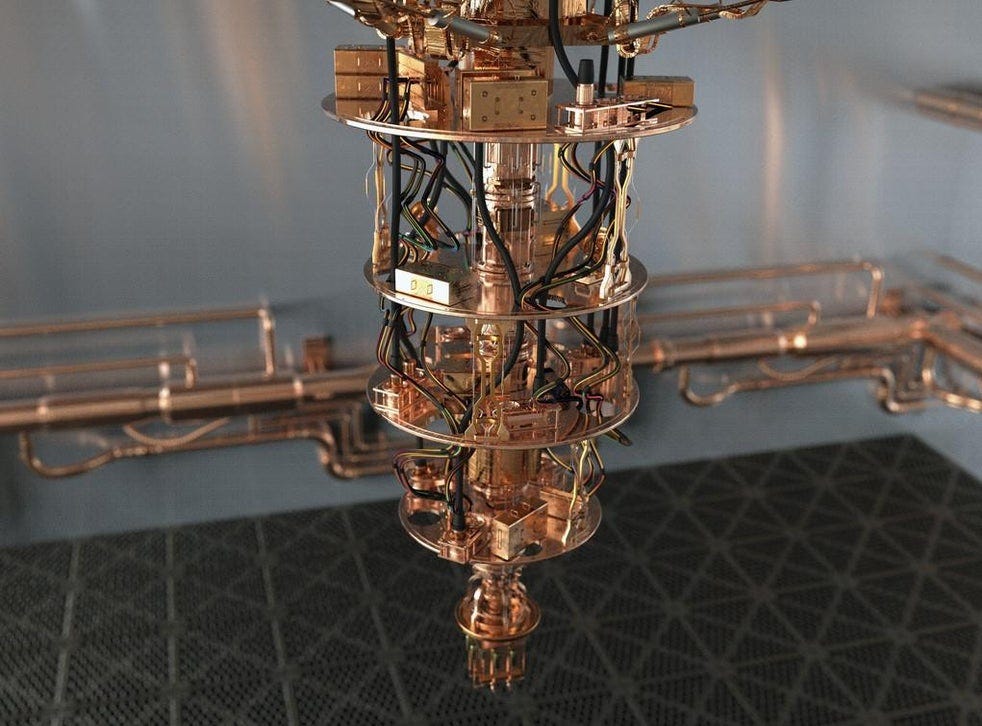

A. Superconducting Qubits: The Speed Demons

Perhaps the most well-funded and widely publicized approach, superconducting qubits are the platform of choice for tech giants like Google and IBM. These are not naturally occurring particles but rather tiny, man-made electrical circuits fabricated from superconducting materials like niobium and aluminum. When cooled to temperatures colder than deep space, just fractions of a degree above absolute zero (-273.15 °C, or 0 K), these circuits exhibit quantum properties.

- How They Work: Electrical currents flow through these circuits without any resistance. The quantum states (0, 1, and superpositions) are represented by the two lowest energy levels of the circuit itself, which behaves like an artificial atom. Applying precise microwave pulses allows scientists to control the qubit’s state, put it into superposition, and entangle it with others.

- Advantages: Their primary advantage is speed. The gate operations—the fundamental manipulations of the qubits—are incredibly fast, typically measured in nanoseconds. They are also based on established semiconductor fabrication techniques, which researchers hope will aid in scaling up the number of qubits on a single chip.

- Challenges: The biggest hurdle is their fragility. Superconducting qubits are extremely sensitive to their environment. Any external “noise,” such as tiny fluctuations in temperature, radiation, or electromagnetic fields, can cause the delicate quantum state to collapse in a process called decoherence. This leads to errors in computation. To combat this, they must be housed in large, complex dilution refrigerators, making them expensive and difficult to maintain.

B. Trapped-Ion Qubits: The High-Fidelity Champions

A leading alternative to superconducting systems, the trapped-ion approach uses nature’s own qubits: individual atoms that have been stripped of an electron, giving them a positive charge (making them ions).

- How They Work: These ions, typically of elements like ytterbium, are confined in a vacuum chamber and held in place by powerful electromagnetic fields, essentially creating a “trap.” Once trapped, they are manipulated with extreme precision using lasers. Different laser pulses can nudge the ion into various energy states, which serve as the 0 and 1 of the qubit.

- Advantages: Trapped-ion qubits are renowned for their stability and high fidelity. Because they are identical, naturally occurring particles suspended in a vacuum, they are less prone to the manufacturing defects that can plague solid-state qubits. They boast very long coherence times, meaning they can hold their quantum state for seconds or even minutes, which is orders of magnitude longer than most superconducting qubits. This stability results in fewer errors.

- Challenges: The trade-off for this high fidelity is speed. The laser manipulations required to perform gate operations are significantly slower than the microwave pulses used for superconducting qubits. Furthermore, while scaling by adding more ions to a single trap is difficult, companies like IonQ and Quantinuum are pioneering modular architectures, aiming to connect multiple individual quantum cores to build more powerful machines.
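The speed-versus-stability trade-off between the two platforms above comes down to simple arithmetic: how many gate operations fit inside the window before the qubit loses its quantum state? The sketch below works that out. The gate and coherence times in it are placeholder, order-of-magnitude assumptions chosen only to illustrate the calculation; they are not measurements of any particular machine, and the function name is hypothetical.

```python
def gates_within_coherence(coherence_time_s, gate_time_s):
    """Rough number of back-to-back gates that fit inside one coherence time."""
    if gate_time_s <= 0:
        raise ValueError("gate_time_s must be positive")
    return coherence_time_s / gate_time_s

# ASSUMED, illustrative values only (seconds). Real devices vary widely.
PLATFORMS = {
    "superconducting (assumed)": {"gate_time_s": 50e-9, "coherence_time_s": 100e-6},
    "trapped ion (assumed)": {"gate_time_s": 100e-6, "coherence_time_s": 10.0},
}

if __name__ == "__main__":
    for name, spec in PLATFORMS.items():
        budget = gates_within_coherence(spec["coherence_time_s"], spec["gate_time_s"])
        print(f"{name}: about {budget:,.0f} gates per coherence time")
```

Under these assumptions, a slower platform can still come out ahead on this particular measure if its qubits stay coherent for long enough, which is why neither raw speed nor raw stability settles the contest on its own.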

C. Photonic Quantum Computing: The Room-Temperature Hopefuls

A radically different and intriguing approach is to use particles of light, or photons, as qubits. This method sidesteps one of the biggest challenges facing the other leading modalities: extreme cold.

- How They Work: In a photonic quantum computer, a single photon’s properties, such as its polarization (the orientation of its oscillation) or its path through an optical circuit, can represent the qubit’s state. Superposition is achieved by passing the photon through a beam splitter, which puts it into a state of traveling down two paths at once.

- Advantages: The most significant benefit is that much of a photonic processor can operate at room temperature, reducing the need for bulky and expensive cryogenic equipment, although the most sensitive single-photon detectors are typically still cooled. Photons are also largely immune to the kind of decoherence that affects matter-based qubits. Furthermore, photonic systems can leverage the decades of investment and infrastructure from the telecommunications and silicon photonics industries.

- Challenges: Working with photons is notoriously difficult. Unlike ions or superconducting circuits, photons don’t naturally interact with each other, which makes creating the crucial two-qubit entanglement gates a major engineering feat. They are also susceptible to being lost or absorbed as they travel through the optical components, which is a primary source of error.
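The beam-splitter behavior and the photon-loss problem described in this list can both be sketched with a little arithmetic in plain Python. The code below assumes an idealized, lossless 50/50 beam splitter written as a 2x2 matrix acting on the photon's two possible paths; the helper names and the per-component transmission figure are illustrative assumptions.

```python
import math

S = 1 / math.sqrt(2)
# Idealized 50/50 beam splitter acting on (path 0, path 1) amplitudes.
BEAM_SPLITTER = [[S, 1j * S], [1j * S, S]]

def apply(matrix, state):
    """Multiply a 2x2 matrix by a two-path amplitude vector."""
    return [sum(matrix[r][c] * state[c] for c in range(2)) for r in range(2)]

def probabilities(state):
    """Chance of detecting the photon in each path."""
    return [abs(a) ** 2 for a in state]

def survival_probability(transmission_per_component, n_components):
    """Chance a photon makes it through a chain of slightly lossy components."""
    return transmission_per_component ** n_components

if __name__ == "__main__":
    photon = [1, 0]                       # photon enters on path 0
    once = apply(BEAM_SPLITTER, photon)   # superposition of both paths
    twice = apply(BEAM_SPLITTER, once)    # the two paths interfere
    print("after one splitter:", probabilities(once))
    print("after two splitters:", probabilities(twice))
    # Assumed 99% transmission per component, 100 components in a row.
    print("survival chance:", survival_probability(0.99, 100))
```

After one splitter the photon is equally likely to be found on either path; after a second one the paths interfere and it exits on a single path with certainty, which is behavior a simple coin flip cannot reproduce. The last line shows how even small per-component losses compound across a long optical circuit.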

D. Other Promising Modalities

The field is rich with other innovative ideas, including neutral atom qubits, which are similar to trapped ions but use uncharged atoms held by optical tweezers; silicon spin qubits, which aim to build quantum dots directly into conventional silicon chips; and the highly theoretical but potentially revolutionary topological qubits, which Microsoft is pursuing, that promise to be inherently protected from errors.

The Ecosystem: More Than Just the QPU

The term “quantum ready hardware” extends far beyond the Quantum Processing Unit (QPU) itself. A functioning quantum computer is a symphony of cutting-edge classical and quantum technologies working in perfect harmony.

A. Cryogenic Infrastructure: For superconducting and some other platforms, the ability to achieve and maintain near-absolute zero temperatures is non-negotiable. This requires multi-stage dilution refrigerators, complex systems of pumps, and advanced thermal shielding. The companies that build this cryogenic hardware are as vital to the quantum ecosystem as the chip designers.

B. Control and Measurement Electronics: Each qubit, regardless of its type, needs to be precisely controlled and measured. This requires a sophisticated rack of classical hardware that can generate and send extremely precise analog signals (microwaves or lasers) to the QPU and then interpret the faint signals that come back. Managing the wiring and signal integrity for thousands or eventually millions of qubits is a monumental engineering challenge that companies are actively working to solve, often by designing custom ASICs (Application-Specific Integrated Circuits) that can operate at cryogenic temperatures alongside the QPU.

C. Quantum-Classical Hybrid Systems: In the current era, known as the Noisy Intermediate-Scale Quantum (NISQ) era, quantum computers are not standalone devices. They act as powerful co-processors in a hybrid system. A classical computer handles the overall workflow, prepares the data, sends instructions to the QPU, and then receives and interprets the probabilistic results from the quantum computation. The software and networking that seamlessly link these two worlds are a critical component of being “quantum ready.”
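The hybrid workflow described above can be sketched as an ordinary loop. In the toy example below, `run_on_qpu` is a hypothetical stand-in that merely simulates a one-qubit experiment with random sampling; in a real system it would be a call to quantum hardware through a vendor's software kit. The classical side repeatedly asks the "QPU" for noisy, probabilistic results and uses them to tune a single control setting (`theta`) until the measured value is as low as possible.

```python
import math
import random

def run_on_qpu(theta, shots=2000, rng=random):
    """Stand-in for a QPU call: rotate one qubit by theta, measure it `shots` times,
    and return the average result (+1 for each 0, -1 for each 1)."""
    p_one = math.sin(theta / 2) ** 2
    ones = sum(rng.random() < p_one for _ in range(shots))
    return (shots - 2 * ones) / shots

def minimize_energy(theta=0.3, steps=60, learning_rate=0.4, rng=random):
    """Classical outer loop: estimate the slope from two QPU runs, then adjust theta."""
    for _ in range(steps):
        plus = run_on_qpu(theta + math.pi / 2, rng=rng)
        minus = run_on_qpu(theta - math.pi / 2, rng=rng)
        slope = (plus - minus) / 2
        theta -= learning_rate * slope
    return theta, run_on_qpu(theta, rng=rng)

if __name__ == "__main__":
    best_theta, best_value = minimize_energy()
    print("tuned setting:", best_theta)
    print("measured value at that setting:", best_value)
```

The division of labor is the point: the quantum side only ever prepares a state and reports measurement statistics, while all of the bookkeeping and decision-making stays on the classical computer.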

The Quantum Threat and the Race for Security

One of the most urgent drivers for becoming quantum-ready has little to do with optimization or simulation and everything to do with security. Much of the world’s current digital security infrastructure relies on encryption standards like RSA and ECC. The security of these methods is based on the fact that it is incredibly difficult for classical computers to solve certain mathematical problems, like factoring very large numbers (for RSA) or computing discrete logarithms on elliptic curves (for ECC).

However, a sufficiently powerful fault-tolerant quantum computer, running an algorithm developed by Peter Shor in 1994, could solve these problems efficiently, rendering most of our current public-key encryption obsolete. This would expose everything from financial transactions and government secrets to private communications and critical infrastructure.
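To see why Shor's algorithm threatens factoring-based encryption, it helps to know that factoring a number can be reduced to finding how often a certain sequence repeats (its "order"). The sketch below, in plain Python, shows that reduction on tiny numbers. The function names are illustrative, and `find_order` uses slow brute force: that single step is the one a quantum computer would perform efficiently, while everything else is ordinary classical arithmetic.

```python
import math

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force (assumes gcd(a, n) == 1).

    This loop is the bottleneck: its running time grows exponentially with the
    number of digits in n. It is the part Shor's algorithm speeds up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_order(n, a):
    """Try to split n using the order of a; returns a pair of factors or None."""
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: try a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # unhelpful case: try a different a
    p = math.gcd(half - 1, n)
    return p, n // p

if __name__ == "__main__":
    print(factor_via_order(15, 7))
    print(factor_via_order(21, 2))
```

For numbers this small the brute-force search is instant, but for the very large numbers used in real RSA keys it is hopeless on classical hardware, which is exactly the gap a fault-tolerant quantum computer would close.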

This looming “Quantum Threat” has sparked a global effort to develop Post-Quantum Cryptography (PQC). PQC involves designing new cryptographic algorithms based on different mathematical problems that are believed to be hard for both classical and quantum computers to solve. Organizations like the U.S. National Institute of Standards and Technology (NIST) have been leading the effort to standardize these new algorithms, and NIST published its first finalized PQC standards in 2024. Becoming “quantum ready” from a security perspective means migrating critical systems to these new, quantum-resistant standards, a process that needs to begin now, long before a cryptographically relevant quantum computer arrives.

A Call to Action: Preparing for the Quantum Future

The emergence of quantum-ready hardware is not an event to be passively observed; it is a paradigm shift that demands active preparation across all sectors of society. Waiting until the technology is fully mature will be too late.

A. For Business and Industry Leaders: The time to start is now. Begin by educating yourself and your technical teams about the fundamentals of quantum computing. Identify the types of problems within your organization—optimization, simulation, or machine learning challenges—that are currently intractable and could be candidates for a quantum solution. Start small with pilot projects using the quantum cloud platforms offered by IBM, Google, Amazon, and Microsoft. This will help build institutional knowledge and position your company to be an early adopter rather than a late follower.

B. For Developers, Engineers, and Scientists: The tools to start learning are at your fingertips. Open-source software development kits like IBM’s Qiskit and Google’s Cirq allow you to write real quantum programs and run them on actual quantum hardware via the cloud. Understanding this new programming paradigm, which is fundamentally probabilistic and linear algebra-based, is a valuable skill for the future. Familiarize yourself with the different hardware types and their performance characteristics to understand which might be best suited for different applications.

C. For Governments and Academia: Fostering a robust quantum ecosystem is a matter of national strategic importance. This involves funding fundamental research, promoting public-private partnerships, and developing educational programs to create a quantum-literate workforce. Establishing standards for technology, security, and ethics is crucial to ensure that the development of quantum computing proceeds in a way that is safe, equitable, and beneficial to society.

The Dawn of a New Computation

The journey toward fault-tolerant quantum computing is a marathon, not a sprint. We are still in the early, formative days, grappling with noise, errors, and the immense challenges of scale. Yet, the progress is undeniable. The emergence of tangible, accessible, and increasingly powerful quantum-ready hardware marks a definitive turning point. We are moving beyond pure theory and are now building the foundational infrastructure for a new era of computation.

This hardware, in its various forms, represents more than just a technological achievement; it is a new lens through which we can view the universe and its complexities. It promises to unlock solutions to some of humanity’s most pressing problems, from curing diseases and designing sustainable materials to creating more intelligent AI and securing our digital world. The rise of quantum-ready hardware is not a distant forecast; it is happening now. The question is no longer if the quantum revolution will come, but whether we will be ready when it does.