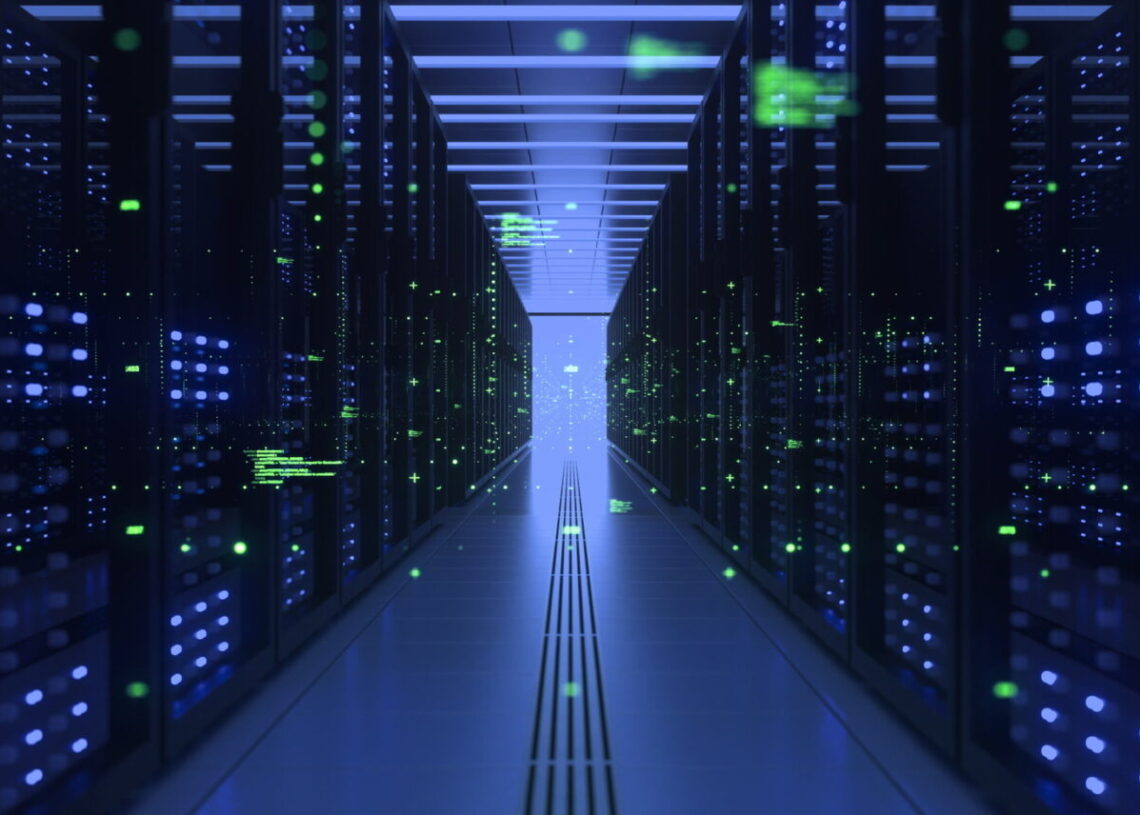

In an increasingly data-driven and digitally connected world, demand for computing power and storage capacity has outgrown what traditional enterprise data centers can supply. This demand has given rise to hyperscale data centers: massive facilities built by tech giants to power their global services, supporting billions of users and petabytes of data. Scale alone, however, is not enough. The real competitive advantage lies in running that scale efficiently. Optimized hyperscale efficiency represents the leading edge of cloud infrastructure management, where design, operations, and resource allocation are fine-tuned to maximize performance, minimize costs, and reduce environmental impact. Achieving it requires cutting-edge technologies, innovative operational practices, and a sustained focus on automation and sustainability.

From cooling systems to server utilization, and from network architecture to software-defined everything, hyperscale operators are constantly pushing the boundaries of what’s possible in large-scale computing. This article examines the factors driving the need for hyperscale efficiency, the key strategies and technologies used to achieve it, the benefits it delivers, the challenges of implementing it, and its likely impact on the future of cloud computing and global digital infrastructure.

The Imperative for Hyperscale Efficiency

The relentless growth of data, user demand, and complex applications makes optimizing hyperscale environments not just a best practice, but an absolute necessity.

- Explosive Data Growth: The world generates an unprecedented amount of data daily from IoT devices, social media, e-commerce, and more. Hyperscale data centers must store, process, and analyze this data efficiently to derive value and insights.

- Massive User Bases: Global services like search engines, social media platforms, and streaming services serve billions of users concurrently, requiring immense and highly responsive infrastructure. Efficient scaling is paramount to maintain user experience.

- Energy Consumption and Environmental Impact: Hyperscale data centers consume colossal amounts of energy, primarily for powering servers and cooling them. Optimizing efficiency directly translates to reduced carbon footprint and alignment with global sustainability goals.

- Operational Costs: Energy is one of the largest operating expenses for these facilities. Across a large fleet, each percentage point of efficiency gain can be worth millions of dollars a year in savings. Labor costs for manual operations also add up quickly.

- Performance and Latency Demands: Users expect instantaneous responses. Optimizing network paths, server loads, and data retrieval processes is critical to delivering ultra-low latency, especially for applications like real-time analytics, online gaming, and financial trading.

- Competitive Landscape: Major cloud providers (e.g., AWS, Azure, Google Cloud) operate at hyperscale. Their ability to deliver services reliably and affordably hinges on their efficiency, directly impacting their market share and profitability.

- Resource Scarcity: Factors like available land, access to clean energy, and water for cooling can be limiting. Maximizing resource utilization within existing facilities becomes crucial for continued expansion.
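To see how a single percentage point turns into real money, here is a back-of-the-envelope sketch. The fleet size (1,000 MW average draw) and electricity price ($0.07/kWh) are hypothetical round numbers chosen for illustration, not figures from any operator.

```python
# Back-of-the-envelope energy cost model. All inputs are hypothetical.
HOURS_PER_YEAR = 8760


def annual_energy_cost(avg_load_mw: float, price_per_kwh: float) -> float:
    """Annual electricity cost in dollars for a constant average load."""
    kwh_per_year = avg_load_mw * 1000 * HOURS_PER_YEAR
    return kwh_per_year * price_per_kwh


def savings_per_point(avg_load_mw: float, price_per_kwh: float) -> float:
    """Dollars saved per year by cutting total energy use by one percentage point."""
    return annual_energy_cost(avg_load_mw, price_per_kwh) * 0.01


if __name__ == "__main__":
    # Assumed fleet: 1,000 MW average draw at $0.07/kWh.
    print(f"{savings_per_point(1000, 0.07):,.0f}")
```

With these assumed inputs the script prints 6,132,000, roughly $6 million a year per percentage point. The result scales linearly with fleet size and energy price.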

Key Strategies and Technologies for Optimized Hyperscale Efficiency

Achieving hyperscale efficiency involves a multi-pronged approach, integrating advanced hardware, intelligent software, and innovative operational models.

Infrastructure Design & Hardware Optimization

- Custom Hardware and Rack Designs: Hyperscalers often design their own servers, racks, and components (e.g., Google’s custom TPUs for AI, or the server and rack designs Facebook contributed to the Open Compute Project) to match their workloads precisely and maximize power efficiency and density. Custom designs also let them remove components that off-the-shelf servers include but their workloads do not need.

- Advanced Cooling Solutions: Moving beyond traditional air cooling, hyperscalers employ liquid cooling (direct-to-chip, immersion cooling), evaporative cooling, and even direct free-air cooling in suitable climates to dramatically reduce energy consumed by chillers.

- Power Distribution Units (PDUs) and UPS Systems: Highly efficient and modular power distribution systems minimize energy loss during power conversion and distribution. Advanced Uninterruptible Power Supply (UPS) systems ensure continuous operation with minimal energy waste.

- High-Density Server Racks: Maximizing the number of compute units per square foot reduces the physical footprint and associated costs (real estate, construction, cooling infrastructure).
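Conversion losses compound: power passes through the UPS, the PDU, and the server power supply in turn, and each stage passes on only a fraction of what it receives. The sketch below multiplies per-stage efficiencies to get the end-to-end figure. The stage names and efficiency values are hypothetical placeholders; real numbers depend on the specific equipment and how heavily it is loaded.

```python
from math import prod

# Hypothetical per-stage efficiencies (fraction of input power passed on).
STAGES = {
    "ups": 0.96,
    "pdu_transformer": 0.98,
    "server_psu": 0.94,
}


def delivered_fraction(stage_efficiencies: dict[str, float]) -> float:
    """Fraction of input power that reaches the server components.

    Losses compound, so the chain efficiency is the product of the stages.
    """
    return prod(stage_efficiencies.values())


def power_lost_kw(input_kw: float, stage_efficiencies: dict[str, float]) -> float:
    """Power dissipated as heat along the chain for a given input."""
    return input_kw * (1 - delivered_fraction(stage_efficiencies))


if __name__ == "__main__":
    print(round(delivered_fraction(STAGES), 4), round(power_lost_kw(1000, STAGES), 1))
```

With these values about 88.4% of input power reaches the servers, so a 1,000 kW feed loses roughly 116 kW as heat, which the cooling system must then remove. Raising only the UPS efficiency to 0.99 lifts the chain to about 91.2%.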

Software-Defined Infrastructure (SDI)

- Software-Defined Networking (SDN): Centralized control over network infrastructure allows for dynamic routing, traffic optimization, and efficient bandwidth allocation, reducing network bottlenecks and improving overall performance.

- Software-Defined Storage (SDS): Decoupling storage hardware from management software enables flexible, scalable, and cost-effective storage solutions that can be optimized for specific application needs (e.g., object storage, block storage).

- Virtualization and Containerization: Extensive use of virtual machines (VMs) and, increasingly, lightweight containers (e.g., Docker containers orchestrated by Kubernetes) raises server utilization by packing more applications onto fewer physical servers, reducing idle resources.
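Packing workloads onto fewer servers is a form of bin packing. The sketch below uses first-fit decreasing (FFD), a classic heuristic, as an illustration; it is not any particular scheduler’s algorithm. It assumes a single resource dimension (say, vCPUs) and identical hosts, whereas real schedulers also weigh memory, affinity rules, and failure domains. `Host` and `first_fit_decreasing` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    capacity: float                      # e.g. vCPUs on one physical server
    used: float = 0.0
    workloads: list[str] = field(default_factory=list)

    def fits(self, demand: float) -> bool:
        return self.used + demand <= self.capacity


def first_fit_decreasing(demands: dict[str, float], host_capacity: float) -> list[Host]:
    """Place workloads on identical hosts using the FFD heuristic.

    Largest workloads go first; each lands on the first host with room,
    and a new host is opened only when no existing host fits.
    """
    hosts: list[Host] = []
    for name, demand in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if demand > host_capacity:
            raise ValueError(f"{name} needs {demand}, more than one host provides")
        target = next((h for h in hosts if h.fits(demand)), None)
        if target is None:
            target = Host(capacity=host_capacity)
            hosts.append(target)
        target.used += demand
        target.workloads.append(name)
    return hosts


if __name__ == "__main__":
    demo = {"web": 6, "api": 5, "db": 10, "cache": 3, "batch": 8, "log": 2}
    for i, host in enumerate(first_fit_decreasing(demo, host_capacity=16)):
        print(i, host.used, host.workloads)
```

For the hypothetical demands above (34 vCPUs in total on 16-vCPU hosts), FFD uses three hosts, which is the minimum possible.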

Automation and Orchestration

- Operations Automation: Automating repetitive operational tasks like patching, provisioning, and monitoring, typically through scripts, configuration management, and infrastructure-as-code tooling, reduces human error, speeds up processes, and frees staff for higher-value work.

- Orchestration Platforms (e.g., Kubernetes): Automating the deployment, scaling, and management of applications across thousands of servers ensures efficient resource allocation and self-healing capabilities.

- AI/ML for Resource Management: Artificial intelligence and machine learning algorithms analyze vast amounts of operational data to predict resource needs, optimize workload placement, dynamically adjust power consumption, and proactively identify potential failures. Google’s use of DeepMind machine learning models to manage data center cooling, which Google reported cut the energy used for cooling by up to 40%, is a prime example.
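Kubernetes’ Horizontal Pod Autoscaler documents a simple base rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The sketch below applies that rule to a forecast instead of the latest measurement, using simple exponential smoothing as a deliberately simple stand-in for the ML models described above. The smoothing step, the `alpha` value, and the CPU history are assumptions for illustration. The real HPA also applies a tolerance band, min/max replica bounds, and stabilization windows, all omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """HPA base rule: ceil(current_replicas * current_metric / target_metric).

    Omits the HPA's tolerance band, replica bounds and stabilization windows.
    """
    return math.ceil(current_replicas * current_metric / target_metric)


def smoothed_forecast(history: list[float], alpha: float = 0.5) -> float:
    """Simple exponential smoothing; a stand-in for a learned demand model."""
    level = history[0]
    for observation in history[1:]:
        level = alpha * observation + (1 - alpha) * level
    return level


if __name__ == "__main__":
    cpu_history = [40.0, 55.0, 70.0, 85.0]  # hypothetical average CPU % per pod
    forecast = smoothed_forecast(cpu_history)
    print(forecast, desired_replicas(4, forecast, target_metric=60.0))
```

For the hypothetical history shown, the forecast is 71.875% CPU per pod, so four pods targeted at 60% scale to five. Scaling on a forecast lets capacity arrive before the load does, at the cost of acting on predictions that can be wrong.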

Workload Optimization

- Load Balancing and Traffic Management: Intelligently distributing incoming requests across numerous servers and data centers ensures optimal resource utilization and prevents individual servers from becoming overloaded.

- Microservices Architecture: Breaking down applications into smaller, independently deployable services allows for more granular scaling and efficient resource allocation, as only the necessary services are scaled up or down.

- Serverless Computing (FaaS): Running code only when an event triggers it, without customers managing servers, reduces idle compute for bursty or intermittent workloads and shifts operational burden to the cloud provider, which can pool capacity across many tenants.
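Least-connections is one common load-balancing policy; others include round robin, weighted schemes, and consistent hashing. The single-process sketch below tracks active connections per backend and sends each new request to the least busy one. The class and backend names are hypothetical, and production balancers add health checks, weights, and distributed state.

```python
class LeastConnectionsBalancer:
    """Route each request to the backend with the fewest active connections."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("at least one backend is required")
        self.active = {name: 0 for name in backends}

    def acquire(self) -> str:
        # min() returns the first backend with the lowest count, so ties
        # go to the earliest-registered backend.
        backend = min(self.active, key=self.active.__getitem__)
        self.active[backend] += 1
        return backend

    def release(self, backend: str) -> None:
        # Call when a request finishes so the backend's load drops again.
        if self.active[backend] <= 0:
            raise ValueError(f"{backend} has no active connections")
        self.active[backend] -= 1


if __name__ == "__main__":
    lb = LeastConnectionsBalancer(["a", "b", "c"])
    print([lb.acquire() for _ in range(3)])  # spreads across all three
    lb.release("b")
    print(lb.acquire())                      # "b" is now least busy
```

Unlike plain round robin, this policy adapts when some requests run much longer than others, because slow backends accumulate connections and stop receiving new work.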

Energy Management and Sustainability

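- Power Usage Effectiveness (PUE) Optimization: PUE is the ratio of total facility energy to the energy delivered to IT equipment, so lower numbers indicate higher efficiency. A PUE of 1.0 would mean every watt reaches the IT equipment; a PUE of 2.0 means half of the facility’s energy goes to overhead such as cooling and power conversion. Hyperscalers strive for PUEs approaching 1.0.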

- Renewable Energy Sourcing: Investing heavily in renewable energy sources (solar, wind) for data center operations dramatically reduces the carbon footprint and contributes to sustainability goals.

- Waste Heat Recovery: Capturing and reusing the waste heat that servers generate, for example for district heating or other industrial processes.
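The PUE definition reduces to a simple ratio, and the share of energy lost to overhead follows from it as 1 - 1/PUE. The helper names below are hypothetical, and the meter readings in the usage note are illustrative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness = total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be below IT energy")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that never reaches IT equipment."""
    return 1 - 1 / pue_value


def overhead_kwh(it_equipment_kwh: float, pue_value: float) -> float:
    """Energy spent on cooling, power conversion, lighting, etc."""
    return it_equipment_kwh * (pue_value - 1)


if __name__ == "__main__":
    p = pue(1_200_000, 1_000_000)
    print(round(p, 2), round(overhead_share(p), 3), overhead_share(2.0))
```

For example, a site that draws 1,200,000 kWh in total while its IT equipment consumes 1,000,000 kWh has a PUE of 1.2, so about 16.7% of its energy goes to overhead. At a PUE of 2.0 the overhead share is exactly 50%.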

Network Architecture

- Disaggregated Network Fabric: Separating network hardware from software control, often using white-box switches, allows for greater flexibility, cost reduction, and optimized network performance.

- High-Speed Interconnects: Employing cutting-edge fiber optics and high-bandwidth networking technologies within and between data centers ensures rapid data transfer and low latency.

- Content Delivery Networks (CDNs): Distributing content closer to end-users through CDNs reduces latency and offloads traffic from core data center infrastructure, improving overall efficiency.
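How much traffic a CDN offloads depends on its cache hit ratio: every hit is a request the origin data center never sees. The sketch below models one edge cache with least-recently-used (LRU) eviction, one of several possible eviction policies, and counts origin fetches. The class name and the `fetch_from_origin` callback are hypothetical; real CDNs add TTLs, tiered caches, and request coalescing.

```python
from collections import OrderedDict
from typing import Callable


class LRUEdgeCache:
    """Edge cache with least-recently-used eviction; counts origin fetches."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._store: OrderedDict[str, bytes] = OrderedDict()
        self.hits = 0
        self.origin_fetches = 0

    def get(self, key: str, fetch_from_origin: Callable[[str], bytes]) -> bytes:
        if key in self._store:
            self._store.move_to_end(key)       # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.origin_fetches += 1               # miss: traffic reaches the origin
        value = fetch_from_origin(key)
        self._store[key] = value
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)    # evict least recently used
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.origin_fetches
        return self.hits / total if total else 0.0


if __name__ == "__main__":
    cache = LRUEdgeCache(capacity=2)
    for path in ["/a", "/b", "/a", "/c", "/a", "/b"]:
        cache.get(path, lambda k: k.encode())
    print(cache.hits, cache.origin_fetches)
```

In this tiny trace, two of six requests are served from the edge and four reach the origin; with realistic, heavily skewed request popularity and larger caches, the hit ratio is usually much higher.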

Transformative Benefits of Hyperscale Efficiency

The relentless pursuit of optimized hyperscale efficiency yields a multitude of profound benefits that ripple across the entire digital ecosystem.

- Significant Cost Reduction: Lower energy consumption, optimized hardware utilization, reduced manual labor through automation, and efficient resource allocation directly translate to substantial savings in operational expenditure (OpEx) and capital expenditure (CapEx).

- Enhanced Performance and Responsiveness: Highly optimized infrastructure ensures ultra-low latency, faster data processing, and rapid response times for applications, leading to superior user experience and supporting real-time critical systems.

- Massive Scalability and Elasticity: The ability to seamlessly scale resources up or down to meet fluctuating demand, without significant performance degradation or cost spikes, provides unparalleled flexibility and agility to businesses.

- Improved Reliability and Resilience: Intelligent automation, redundant systems, and proactive monitoring detect and address issues before they impact services, leading to higher uptime and business continuity.

- Reduced Environmental Impact: Lower energy consumption, combined with a higher reliance on renewable energy sources and innovative cooling, drastically shrinks the carbon footprint of digital services, contributing to global sustainability efforts.

- Accelerated Innovation: By providing a highly efficient and scalable foundation, hyperscale clouds enable developers and businesses to innovate faster, experiment with new technologies (like AI/ML), and bring new products and services to market quicker.

- Competitive Advantage: Cloud providers that achieve superior hyperscale efficiency can offer more cost-effective services, better performance, and greater reliability, attracting and retaining more customers.

- Operational Simplicity (for Users): While complex for the providers, the efficiency at hyperscale allows end-users of cloud services to abstract away infrastructure complexities and focus purely on their applications and business logic.
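Redundancy’s contribution to uptime can be estimated with the standard parallel-availability formula, A = 1 - (1 - a)^n, where a is one replica’s availability and n is the number of replicas, any one of which can serve traffic. The sketch assumes failures are independent, which shared power feeds, network partitions, or a bad deployment can easily violate, so treat the result as an optimistic estimate. The function names are hypothetical.

```python
HOURS_PER_YEAR = 8760


def parallel_availability(component_availability: float, replicas: int) -> float:
    """Availability when the service survives as long as any replica is up.

    Assumes replica failures are independent; correlated failures make
    the true figure lower than this estimate.
    """
    if not 0.0 <= component_availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("need at least one replica")
    return 1 - (1 - component_availability) ** replicas


def downtime_hours_per_year(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    for n in (1, 2, 3):
        a = parallel_availability(0.99, n)
        print(n, round(a, 6), round(downtime_hours_per_year(a), 3))
```

Under these assumptions, a single replica at 99% availability is down about 87.6 hours a year, while two independent replicas reach 99.99%, or under an hour of expected downtime.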

Challenges in Achieving and Maintaining Hyperscale Efficiency

Despite the compelling benefits, the journey to optimized hyperscale efficiency is fraught with significant technical, operational, and financial challenges.

- Immense Complexity: Managing millions of servers, petabytes of data, and global networks, all while optimizing every variable, creates an unparalleled level of complexity that requires sophisticated automation and AI.

- High Initial Capital Investment (CapEx): Designing and building hyperscale data centers with custom hardware, advanced cooling, and redundant power systems requires enormous upfront capital expenditure.

- Talent Acquisition and Retention: There’s a severe shortage of engineers and operations staff with the specialized skills required to design, build, and maintain hyperscale infrastructure and its complex optimization systems.

- Vendor Lock-in (and Avoiding It): Hyperscalers often build their own proprietary solutions, and clients that depend on provider-specific services can face vendor lock-in, which makes migration difficult. Providers must weigh the efficiency gains of proprietary designs against customer demand for portability.

- Balancing Performance and Cost: Achieving optimal efficiency often means making trade-offs between absolute performance, redundancy, and cost. Finding the right balance for diverse workloads is an ongoing challenge.

- Rapid Technological Evolution: The pace of change in hardware (CPUs, GPUs, storage), networking, and software technologies is incredibly fast. Hyperscalers must constantly innovate and upgrade to maintain their efficiency edge.

- Security at Scale: Securing vast, distributed infrastructure against a continuous barrage of cyber threats requires sophisticated, automated security measures and constant vigilance.

- Environmental Regulations and Public Scrutiny: As energy consumption grows, hyperscalers face increasing regulatory pressure and public scrutiny regarding their environmental impact, necessitating transparent sustainability efforts.

- Global Supply Chain Dependencies: Sourcing components for custom hardware and building materials for massive data centers relies on complex global supply chains, which can be vulnerable to disruptions.

- Legacy System Integration: Even hyperscalers often deal with older systems that need to be integrated or phased out, adding layers of complexity to efficiency optimization efforts.

The Future of Continuous Optimization and Sustainable Scale

The trajectory of hyperscale efficiency points towards a future defined by perpetual optimization, deep integration of AI, and an unwavering commitment to sustainable growth.

- AI/ML as the Core Optimizer: Artificial intelligence and machine learning will move beyond assisting and become the primary drivers of hyperscale efficiency, autonomously managing resource allocation, predicting failures, optimizing energy consumption, and even dynamically adjusting infrastructure configurations in real-time.

- Full Automation and Lights-Out Operations: Data centers will increasingly move towards “lights-out” operations, where human intervention is minimized, with automation handling routine tasks, maintenance, and even complex incident response.

- Advanced Materials and Quantum Computing: Research into new materials for heat dissipation, more efficient processor designs (e.g., neuromorphic chips), and, further out, quantum computing could further transform compute efficiency.

- Edge-Cloud Synergy: Hyperscale data centers will work in even closer synergy with edge computing, intelligently offloading real-time processing to the edge while retaining the core for large-scale data analytics and global services.

- Renewable Energy Dominance: Hyperscalers will accelerate their transition to 100% renewable energy sourcing, potentially even becoming net energy producers for surrounding communities.

- Circular Economy for Hardware: Greater emphasis will be placed on extending the lifespan of hardware, recycling components, and developing sustainable decommissioning processes to minimize electronic waste.

- Specialized Hardware Acceleration: Continued proliferation of purpose-built hardware accelerators (e.g., for AI/ML, cryptography, networking) will drive significant efficiency gains for specific workloads.

- Self-Healing and Autonomous Infrastructure: Infrastructure will become increasingly self-aware and self-healing, using AI to detect, diagnose, and resolve issues without human intervention, ensuring continuous optimization and reliability.

- Global Resilient Networks: The underlying network infrastructure will become even more resilient, leveraging technologies like software-defined WAN (SD-WAN) and advanced optical networks to ensure efficient and robust data transfer globally.

Conclusion

Hyperscale data centers, though often unseen, are the indispensable engine powering our digital world. Their ability to deliver services at an unprecedented scale is only possible through a relentless and sophisticated focus on efficiency. From innovative cooling techniques and custom hardware to the pervasive application of AI-driven automation and software-defined everything, hyperscale operators are constantly redefining what is achievable in terms of performance, cost-effectiveness, and sustainability.

The journey towards optimized hyperscale efficiency is an ongoing one, marked by continuous innovation and a commitment to pushing technological boundaries. As the demand for digital services continues its exponential climb, the mastery of hyperscale efficiency will remain the cornerstone of competitive advantage for cloud providers and the silent enabler of global digital transformation. The future promises even more intelligent, automated, and environmentally conscious hyperscale environments, solidifying their role as the invisible, yet immensely powerful, backbone of our connected planet.